¹Beijing Institute of Technology ²SKL-IOTSC, CIS, University of Macau

³MEGVII Technology ⁴School of Computer Science, Wuhan University

⁵Beijing Academy of Artificial Intelligence

*Equal contribution. +Corresponding author.

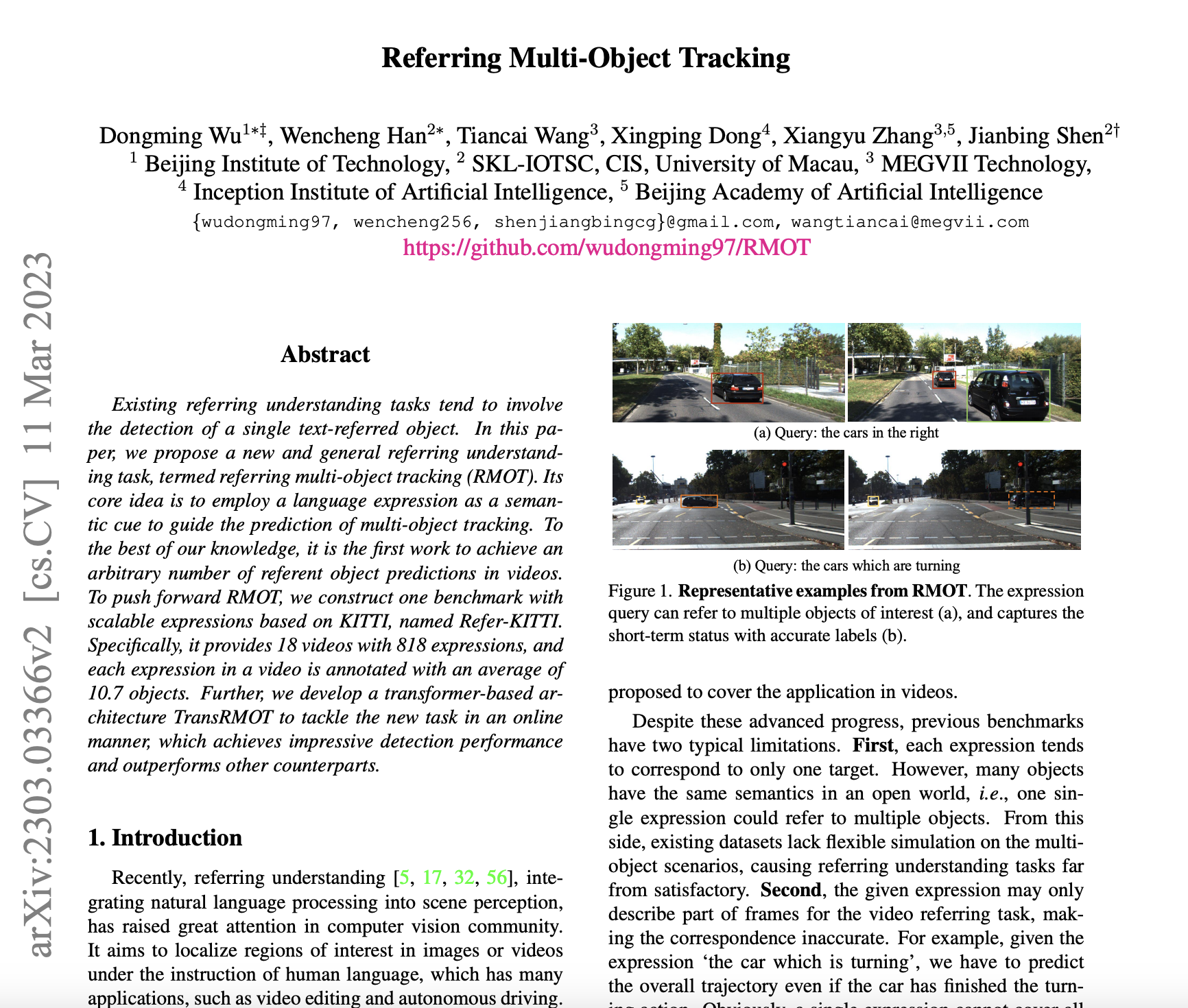

Existing referring understanding tasks tend to involve the detection of a single text-referred object. In this paper, we propose a new and general referring understanding task, termed referring multi-object tracking (RMOT). Its core idea is to employ a language expression as a semantic cue to guide the prediction of multi-object tracking. To the best of our knowledge, it is the first work to predict an arbitrary number of referent objects in videos. To push forward RMOT, we construct a benchmark with scalable expressions based on KITTI, named Refer-KITTI. Specifically, it provides 18 videos with 818 expressions, and each expression in a video is annotated with an average of 10.7 objects. Further, we develop a transformer-based architecture, TransRMOT, to tackle the new task in an online manner; it achieves impressive detection performance and outperforms other counterparts.

Examples of Refer-KITTI. It provides high-diversity scenes and high-quality annotations referred to by expressions.

Labeling example from our dataset. The turning action is labeled with only two clicks on bounding boxes, one at the starting frame and one at the ending frame. The intermediate frames are then labeled automatically and efficiently with the help of unique identities.
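The two-click labeling idea can be illustrated with a minimal Python sketch. It assumes that per-frame tracking annotations with unique identities already exist, and that a click simply selects a frame and a track identity. The names `Click`, `propagate_labels`, and the `tracks` layout are hypothetical and are not taken from the Refer-KITTI tooling.

```python
from dataclasses import dataclass

# (x1, y1, x2, y2) box layout is an assumption made for this sketch.
Box = tuple[float, float, float, float]


@dataclass(frozen=True)
class Click:
    """Hypothetical record of one annotator click on a bounding box."""
    frame: int
    track_id: int


def propagate_labels(
    tracks: dict[int, dict[int, Box]], start: Click, end: Click
) -> dict[int, Box]:
    """Label every frame between two clicks by following one track identity.

    `tracks` maps frame index -> {track_id: box}. Frames where the identity
    is absent (for example, under occlusion) are skipped.
    """
    if start.track_id != end.track_id:
        raise ValueError("both clicks must select the same identity")
    if start.frame > end.frame:
        raise ValueError("the starting click must not come after the ending click")

    labels: dict[int, Box] = {}
    for frame in range(start.frame, end.frame + 1):
        box = tracks.get(frame, {}).get(start.track_id)
        if box is not None:
            labels[frame] = box
    return labels


# Toy annotations: identity 7 is visible in frames 0-5 except frame 3.
tracks = {
    f: ({7: (10.0 + f, 20.0, 50.0 + f, 60.0)} if f != 3 else {})
    for f in range(6)
}
turning = propagate_labels(tracks, Click(frame=1, track_id=7), Click(frame=4, track_id=7))
print(sorted(turning))  # frames labeled for the expression
```

Because only the two endpoint clicks are supplied by hand, the annotation cost per object in this sketch does not grow with the length of the action.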

The overall architecture of TransRMOT. It is an online cross-modal tracker with four essential parts: feature extractors, a cross-modal encoder, a decoder, and a referent head. The feature extractors embed the input video and the corresponding language query into feature maps/vectors. The cross-modal encoder models a comprehensive visual-linguistic representation via efficient fusion. The decoder takes the visual-linguistic features, the detect queries, and the track queries as inputs and updates the query representations. The referent head then uses the updated queries to predict the referred objects.
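The data flow through the four parts can be sketched in a few lines of plain Python. This is a toy illustration under strong assumptions, not the TransRMOT implementation: random vectors stand in for the feature extractors, single-head dot-product attention with a residual connection stands in for both the encoder fusion and the decoder, and the referent head is a single sigmoid-scored linear layer. All function names and the 0.5 threshold are hypothetical.

```python
import math
import random


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, memory):
    """Single-head dot-product attention over `memory`, plus a residual."""
    dim = len(memory[0])
    updated = []
    for q in queries:
        weights = softmax(
            [sum(a * b for a, b in zip(q, m)) / math.sqrt(dim) for m in memory]
        )
        context = [sum(w * m[i] for w, m in zip(weights, memory)) for i in range(dim)]
        updated.append([qi + ci for qi, ci in zip(q, context)])
    return updated


def random_tokens(rng, count, dim):
    """Stand-in for a feature extractor: `count` random `dim`-d vectors."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(count)]


def referent_head(query, weight):
    """Assumed head: linear layer + sigmoid giving a referent score in (0, 1)."""
    logit = sum(q * w for q, w in zip(query, weight))
    return 1.0 / (1.0 + math.exp(-logit))


def forward_frame(visual, text, detect_queries, track_queries, head_weight):
    # Cross-modal encoder: visual tokens attend to the language tokens.
    fused = attend(visual, text)
    # Decoder: track and detect queries attend to the fused features.
    queries = attend(track_queries + detect_queries, fused)
    # Referent head: one score per updated query.
    scores = [referent_head(q, head_weight) for q in queries]
    return queries, scores


rng = random.Random(0)
DIM = 8
visual = random_tokens(rng, 12, DIM)   # stand-in for video-frame features
text = random_tokens(rng, 5, DIM)      # stand-in for language-query features
detect = random_tokens(rng, 4, DIM)    # queries for newly appearing objects
track = random_tokens(rng, 2, DIM)     # queries carried over from earlier frames
head_weight = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]

queries, scores = forward_frame(visual, text, detect, track, head_weight)
referred = [i for i, s in enumerate(scores) if s > 0.5]  # assumed threshold
print(len(queries), referred)
```

Since each query is scored independently, any number of queries, including none, can pass the threshold in a given frame, which matches the task's requirement of predicting an arbitrary number of referent objects.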

Referring Multi-Object Tracking

Dongming Wu, Wencheng Han, Tiancai Wang, Xingping Dong, Xiangyu Zhang, Jianbing Shen

Citation

title={Referring Multi-Object Tracking},
author={Wu, Dongming and Han, Wencheng and Wang, Tiancai and Dong, Xingping and Zhang, Xiangyu and Shen, Jianbing},
booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
pages={14633--14642},
year={2023},
}

Acknowledgements

This template was originally made by Phillip Isola and Richard Zhang for a colorful ECCV project.